
This PDF is editable and can be completed electronically, or printed blank and then filled out by hand. Please download the PDF and open it from your computer before using and printing, as it contains pages in both A4 and A3 that may not print correctly from the web browser.

The HTML is provided for accessibility purposes. Please do not print the HTML or use it as the primary source for this information. The PDF is the primary product and should be used for printing and distribution.


About this guide

This guide contains advice on how to run a panel-based assessment of policy and other advice papers after their delivery (an ex-post assessment process) using the Policy Quality Framework. It also explains how to score advice papers using the Policy Quality Framework’s paper scoring template. The template sets out the Policy Quality Framework’s standards in a format that helps in assessing the quality of an agency’s written advice, and whether it's fit for purpose. This guide's purpose is to support government agencies in understanding, reporting and improving their policy advice performance.

Policy Quality Framework: standards for quality policy advice

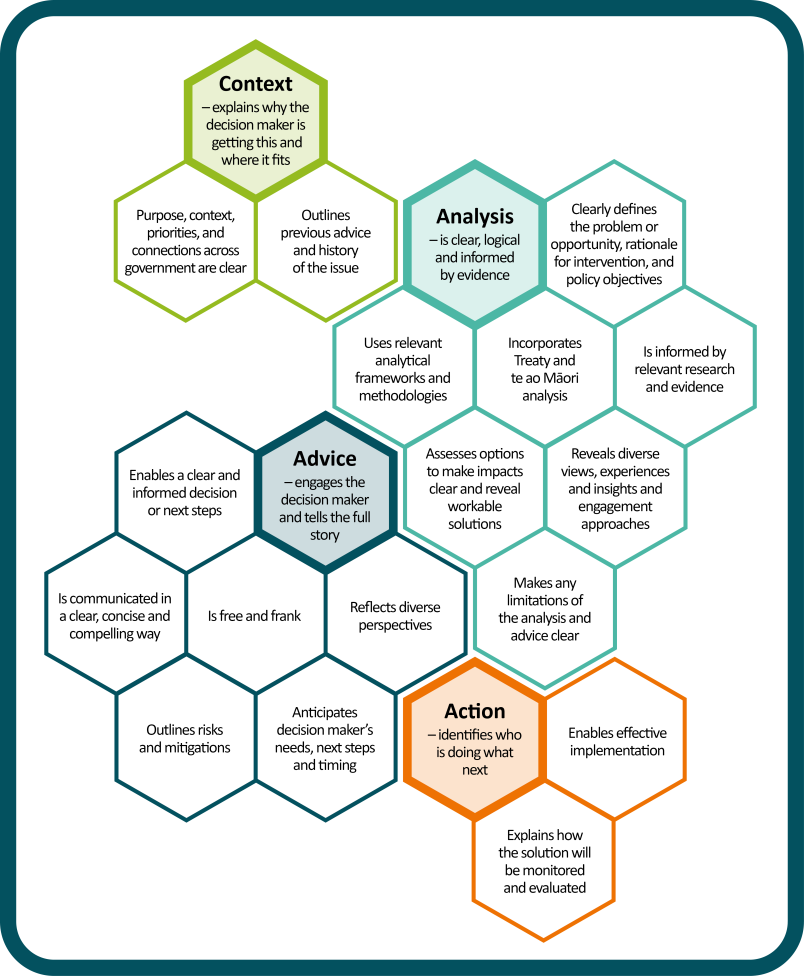

The framework sets out seventeen elements of quality policy advice, organised under four standards: Context, Analysis, Advice, and Action. These standards can be applied by panels to assess papers and decide whether they are fit for purpose. See the full version of the Policy Quality Framework, which provides more detail on each of the elements below.

Why assessing policy advice is important

Public accountability

All government agencies are required to report the quality of their advice, using the Policy Quality Framework, in their Estimates and annual reports. This requires an agency to assess the quality of the advice it delivers during the year. Agencies choose to do this either by establishing their own assessment panels or by contracting out the assessment process.

Continuous improvement

Assessing papers is key to improving the quality of advice and supporting better government decision making. At the individual agency level, the assessment process provides an opportunity to:

- understand the agency’s strengths, identify areas for improvement, and provide a basis for improvement

- identify how policy advice performance in a given period compares with performance in the past

- develop and implement action plans to improve future performance.

At a system level, tracking agency performance results on a consistent basis enables reporting on the state of the policy system, comparisons between agencies, and overall opportunities for improvement.

Who assesses papers and when?

All agencies with policy appropriations need to decide who assesses the quality of their policy advice after delivery, based on what works best in their circumstances. Assessments may be undertaken by an internal panel or by independent external assessors (or a combination, such as a panel with some external membership). Smaller agencies that prefer a panel approach may find it helpful to partner with bigger agencies or opt into a cross-agency panel. Agencies can approach the Policy Project for contacts for quality assurance panels. Shared panels offer the chance to share best practice and learning.

While the results of quality of advice assessments are reported externally once a year, the assessments themselves may be undertaken annually or more often (such as six-monthly or quarterly). Assessing quality of advice performance more often provides an opportunity to assess how well new initiatives and practices are being used, and what difference they’re making to the quality of advice. Assessing advice papers several times throughout the year also spreads the workload so that it avoids the busiest times.

Other resources to support the development of quality policy advice

In addition to this guide, the Policy Project has a range of online resources to help agencies develop quality policy advice. They include:

- a checklist for reviewing papers in development to support authors when developing their advice, and peer reviewers when reviewing the advice of others

- Start Right for helping policy practitioners and managers initiate successful projects

- the Policy Skills Framework and the Development Pathways Tool for helping practitioners identify the knowledge, applied skills, and behaviours they would like to build, and practical actions for building them

- the Policy Methods Toolbox for helping policy practitioners identify and select an appropriate approach to their policy initiative.

How to set up a quality of advice assessment panel

Panel size and membership

For an internal or cross-agency panel, three to five assessors will usually be adequate. Larger panels can be costly in terms of time, and difficult to coordinate.

Having a mixture of internal and external panel members can be beneficial. While external members aren’t required, your agency might see benefit in bringing in an external chair or subject matter expert. External panel members are likely to provide a fresh set of eyes and bring other elements of good practice to the panel process. Panel members could also include representatives from other agencies in the same sector, or with similar functions.

It's good to have some continuity in panel membership or chairing. Distributing panel expertise is also important, so you may want to rotate some panel members.

Internal panel members have a key role in promoting quality advice, performance improvement and best practice. Panels may also choose to invite less experienced policy staff to attend as observers, as this is likely to provide them with a good learning opportunity.

Ensure all members of your panel have appropriate security clearances if they’ll be asked to review any classified material.

Panel roles and expertise

The roles and required expertise of the panel chair, members, and support people are as follows:

Chair

- Needs to have credibility and considerable experience in policy advice.

- Reviews and scores papers as a panel member.

- As the panel moderator, ensures that assessments are consistent and fair across all papers, and must be able to make decisions when panel members disagree about a paper and what it should score.

- May take on the task of writing up results, or may delegate this to an administrative support role.

Panel members

- Must be skilled in giving concrete, constructive feedback on policy papers.

- Must have time to commit to the panel and be supported by their manager to participate.

- Individually review papers, share views on papers’ strengths and weaknesses relative to the Policy Quality Framework standards, and collectively score papers.

Panel support

- Providing support to the panel can be a good development opportunity for less experienced policy staff members. Consider involving an analyst or advisor to take notes, or assist in writing up reports and feedback summaries.

- Support will be required to help select the sample of papers, distribute papers, organise meetings, and seek the background and context on papers if needed.

- Download the checklist for panel administrators, which will help with setting up the assessment process.

Before the panel meeting

Provide this guide to panel members

Give this guide to the chair and panel members when they’re appointed to their roles, and take them through it. It’s important that they become familiar with all aspects of it, especially the full version of the Policy Quality Framework and the paper-scoring template. The template provides a structure for panel members to record the results of their assessments. New panel members will also benefit from receiving copies of previous reviews.

We recommend documenting your panel process, including a terms of reference setting out the roles and responsibilities of the chair and panel members. This will help with inducting new members, new panel support people, and new chairs. It will also help with consistency from year to year.

Decide on suitable papers for assessment

During the relevant period, a randomly selected sample from the population of papers delivered to a decision maker by an agency (or agencies jointly) should be assessed. The sample needs to be stratified to cover a mix of different types of papers from different policy teams and across different Votes/ministers. All papers must have a policy element.

Deciding whether a paper includes an element of policy, and should therefore be considered for review, requires judgement. We would expect papers that provide advice and seek policy-related decisions to be subject to the Policy Quality Framework, but you’ll have to use your judgement about other types of papers. For example, a brief for a meeting with stakeholders that discusses policy issues, or an A3 on the findings of a research project with a direct link to policy issues under consideration, should be included. But an appointments paper or an aide memoire giving an update on operational issues may not.

To cover a broad range of papers, we recommend including:

- ministerial briefings

- Cabinet papers

- Regulatory Impact Statements

- aide memoires for decision makers (e.g. advice for a Cabinet or ministerial meeting)

- decision papers prepared for senior leadership teams

- meeting or event briefs for ministers

- A3 or PowerPoint slide pack reports.

Some agencies have produced their own guidelines on compiling the sample. This helps to ensure continuity from year to year.

Outside the random sample, you may also like to add other papers that you would like to be reviewed (e.g. a specific suite of papers associated with a particular policy issue, or particularly critical and important papers which have not been picked up in the random sample). The additional papers would not be included in the sample scores.

Agencies might want to consider how they can document papers eligible for assessment throughout the year as they’re delivered. This would make it easier to identify the population of papers from which the sample for assessment could be drawn.
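If eligible papers are recorded in a register as they’re delivered, drawing the stratified random sample can be scripted. The Python sketch below is one way to do it, assuming a hypothetical register in which each paper has a type, a team, a portfolio (Vote/minister) and a flag for whether it has a policy element. The field names, the `stratified_sample` function and the proportional allocation rule are illustrative assumptions, not part of the Policy Quality Framework. The sketch drops papers without a policy element, groups the rest by whichever stratum you choose, and shares the total sample across the groups in proportion to their size.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    # Hypothetical register fields; agencies will record their own.
    paper_id: str
    paper_type: str  # e.g. "Cabinet paper", "aide memoire"
    team: str
    portfolio: str  # Vote or minister the paper was delivered to
    has_policy_element: bool


def stratified_sample(register, total, key, seed=None):
    """Randomly sample `total` eligible papers, allocated to strata in
    proportion to stratum size (an illustrative allocation rule)."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible

    # Only papers with a policy element are eligible for assessment.
    strata = defaultdict(list)
    for paper in register:
        if paper.has_policy_element:
            strata[key(paper)].append(paper)

    population = sum(len(group) for group in strata.values())
    total = min(total, population)
    if total == 0:
        return []

    # Proportional allocation in integer arithmetic: take the whole part
    # first, then give leftover places to the largest remainders.
    keys = sorted(strata)
    alloc = {k: total * len(strata[k]) // population for k in keys}
    remainder = {k: total * len(strata[k]) % population for k in keys}
    leftover = total - sum(alloc.values())
    for k in sorted(keys, key=lambda k: remainder[k], reverse=True)[:leftover]:
        alloc[k] += 1

    sample = []
    for k in keys:
        sample.extend(rng.sample(strata[k], alloc[k]))
    return sample
```

For example, `stratified_sample(register, total=20, key=lambda p: (p.team, p.portfolio), seed=2024)` would spread 20 papers across team and portfolio combinations. Stratifying on too many fields at once can leave many groups with a single paper, so a coarser key may suit smaller agencies.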

Try to make papers available at least two to three weeks before the panel convenes. Give assessors adequate time to read all the papers and undertake a preliminary assessment of each using the paper-scoring template in this guide.

Determine the sample size

The appropriate sample size will depend on the population of papers an agency is drawing from. For this reason, there is no strict guidance on the number of papers that should be assessed. Agencies will need to choose a sample of their policy papers large enough to provide a meaningful measure for each ministerial portfolio. A sample of this size also ensures that feedback is available on various paper types and across different teams, which maximises the impact of the feedback. For larger agencies, we suggest a sample of at least 50 papers; for medium agencies, 20 to 40 papers; and for smaller agencies, at least 5 to 10 papers. The sample size should stay proportionate to the number of papers an agency prepares from one year to the next. It needs to be practical, as well as large enough to be statistically meaningful.

Learn about the context of papers

It may be helpful for the assessors to hear about the context of the paper – verbally or in writing. This information can be included with the pack of papers for assessors – based on some quick feedback from the manager responsible for the paper. In some cases, panels may want the author or their manager to be part of the assessment discussion. If so, the appropriate lead contact for each paper should be identified.

Contextual information could include whether the paper was part of preliminary, mid-stream or final advice, or whether important information was provided in other written advice (e.g. the problem may have been diagnosed in an earlier paper). It’s helpful to be clear who the audience for the paper is (e.g. a particular minister, group of ministers, or Cabinet) and how familiar the audience is with the subject matter.

It’s also worth explaining any directions that have been given, or previous decisions that affect the scope of the paper (e.g. limits on particular options). We’d expect these to be covered in the context section of the papers themselves, as per the Policy Quality Framework, but at times the situation can be complex, so it helps for panels to understand them. This information will help assessors judge which quality standards and characteristics are relevant to the paper.

It may also be helpful to discuss constraints to quality, such as:

- time and resources

- the experience of the primary author

- familiarity with the subject matter

- available evidence

- perceived constraints to providing free and frank advice.

Understanding such constraints can be helpful in deciding the best way to frame panel feedback on the paper, but shouldn’t affect a paper’s score (see the section ‘Reaching a collective score for a paper’ below).

How panel members should use the paper-scoring template before the panel meets

Before a panel meeting, individual panel members should use the paper-scoring template to record their preliminary assessment of each paper they read.

The template provides:

- space to work through each of the four standards – Context, Analysis, Advice, and Action – and the more detailed elements that sit within each standard (you can tick yes, no, or N/A as applicable)

- space for comments on key aspects of the paper overall (strengths and weaknesses, and what could have been done differently to improve the paper)

- space for an overall rating of the paper, out of five.

Depending on the type of paper, not all of the elements of the standards will be relevant. You may want to mark these as ‘not applicable’ when completing the template for a specific paper.

As you work your way through a paper:

- assess it against the four standards of quality advice in the assessment template

- write brief comments about what specifically was done that met a standard well or represented best practice

- write brief comments about what specifically could have been done differently to meet the standard.

It may be that some aspects of the standards have been done very well but not so well in others. This needs to be considered in light of the type of paper and its purpose when deciding on the score.

Review the results to identify which aspects of the paper you think are its greatest strengths and weaknesses. These are the matters you will later raise in discussion with others who have assessed the same paper. Individual panel members’ comments will provide useful content for the narrative element of the panel’s report on each paper. The panel discussion will, in turn, help the panel to later reach a collective judgement on the overall numeric score to award the paper out of 5.

Panel meetings

Purpose of panel meeting

The purpose of the panel meetings is to undertake a collective assessment process that:

- reaches agreement about the overall numeric score for each individual paper in a way that reflects the strengths and weaknesses in the quality of advice it provides

- identifies overall patterns of strengths and weaknesses in the quality of advice provided by this sample of papers, and specific areas for the organisation (or organisations within a cross-agency panel) to target for improvement in future.

The discussion on characteristics and the score is useful, as different members of the panel bring different perspectives.

We recommend that if a panel member has been actively involved in the development of a paper, they should abstain from scoring the paper and from the panel discussion. They may even want to leave the room, so as not to constrain other panel members in their discussion.

Reaching a collective score for a paper

At panel meetings, you should discuss individual papers by working through the following steps:

- Consider how the paper performs against each of the Policy Quality Framework’s four high-level standards (and all of the seventeen more specific elements that apply).

- Compare notes on what individual panel members consider to be the strengths and weaknesses of the paper.

- Identify what the author could have done that would have improved the ratings.

- Collectively agree an overall score out of 5, applying the scoring scale below.

When scoring advice papers, don’t adjust scoring based on constraints to quality (e.g. the paper was prepared under extreme time pressure). You will want to note these, but they should not affect the score. Assessors may want to reflect on which constraints most affect the quality of papers overall and any recurring themes.

Scale for scoring the quality of advice

The following scoring scale is to be used when awarding a score out of 5 for each paper. Half points can be awarded where a paper falls between two points on the scale. For example, a paper could score a 3.5 if it had several elements of good practice (in the definition of a 4), but also had some areas for improvement (in the definition of a 3).

1 = Unacceptable

Does not meet the relevant quality standards in fundamental ways

- Lacks basic information and analysis

- Creates serious risk of poor decision making

- Should not have been signed out

- Needed fundamental rework

2 = Poor

Does not meet the relevant quality standards in material ways

- Explains the basic issue but seriously lacking in several important areas

- Creates risk of poor decision making

- Should not have been signed out

- Needed substantial improvement in important areas

3 = Acceptable

Meets the relevant quality standards overall, but with some shortfalls

- Provides most of the analysis and information needed

- Could be used for decision making

- Was sufficiently fit-for-purpose for sign-out

- Could have been improved in several areas

4 = Good

Meets all the relevant quality standards

- Represents good practice

- Provides a solid basis for decision making

- Could have been signed out with confidence

- Minor changes would have added polish

5 = Meets all the relevant quality standards and adds something extra

- Represents exemplary practice

- First-rate advice that provides a sound basis for confident decision making

- Could have been signed out with great confidence

- A polished product
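Because scores are recorded on this scale, with half points allowed between two points, a simple check can catch entries that fall outside it before scores are collated. The Python sketch below assumes scores are entered as numbers; `validate_score` and `describe` are hypothetical helper names. Since the scale gives no one-word label for a 5, the sketch reuses its description instead.

```python
import math

# Labels from the scoring scale. The scale gives no one-word label for 5,
# so its description is used instead.
SCALE = {
    1: "Unacceptable",
    2: "Poor",
    3: "Acceptable",
    4: "Good",
    5: "Meets all the relevant quality standards and adds something extra",
}


def validate_score(score):
    """Return `score` as a float if it is on the 1 to 5 scale in half
    points; otherwise raise ValueError."""
    doubled = score * 2
    if not (1 <= score <= 5) or doubled != int(doubled):
        raise ValueError(f"{score!r} is not on the scale (1 to 5 in steps of 0.5)")
    return float(score)


def describe(score):
    """Describe a score, naming both neighbouring points for a half point."""
    s = validate_score(score)
    lower, upper = math.floor(s), math.ceil(s)
    if lower == upper:
        return f"{lower} = {SCALE[lower]}"
    return f"{s} (between {lower} = {SCALE[lower]} and {upper} = {SCALE[upper]})"
```

For example, `describe(3.5)` gives `"3.5 (between 3 = Acceptable and 4 = Good)"`, while `validate_score(3.25)` raises an error because quarter points aren’t part of the scale.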

Moderating paper scores, relative to one another

It’s important to undertake a moderation process before finalising the numeric scores for each paper. The aim is to reach consensus on each paper’s score, as far as possible. Taking the following steps will help ensure that papers of a similar quality are scored the same, while papers of markedly different levels of quality are appropriately scored differently:

- List the names and scores of all the papers assessed where everyone can see them (e.g. on a white board, flip chart or screen).

- For each sub-group of papers with the same score, discuss whether they really merit the same score, or whether one or more was markedly better or worse than the others and hence should be scored differently.

- Discuss whether the outliers (papers with very high or very low scores) are so much better or worse than the other papers that they merit such high or low scores.

- Where assessors have also taken part in a previous assessment round, discuss whether those papers awarded a given score in this round are of comparable quality to those awarded that score in the previous round.

- In light of any discrepancies in the initial numeric scores revealed during the above moderation discussions, revise individual paper scores so that there is internal consistency – both within this assessment round and if possible, between assessment rounds.
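If the panel keeps its initial scores in a spreadsheet or script, the board used for the first moderation steps can be produced automatically. The Python sketch below groups paper names by score (highest first) and lists possible outliers. The guide doesn’t define an outlier numerically, so the rule used here (flagging any paper at least `gap` points from the median score) and the default of 1.5 points are assumptions to adjust or replace. The output is only a prompt for discussion; the panel still decides whether any score should change.

```python
from collections import defaultdict
from statistics import median


def moderation_board(scores, gap=1.5):
    """Group papers by initial score and list possible outliers.

    `scores` maps each paper's name to its initial panel score. `gap` is
    an illustrative threshold, not taken from the guide: papers scored at
    least this far from the median are flagged for discussion.
    """
    groups = defaultdict(list)
    for name, score in scores.items():
        groups[score].append(name)
    # Highest score first, with paper names sorted within each group.
    board = {score: sorted(names) for score, names in sorted(groups.items(), reverse=True)}

    middle = median(scores.values())
    outliers = sorted(name for name, score in scores.items() if abs(score - middle) >= gap)
    return board, outliers


if __name__ == "__main__":
    board, outliers = moderation_board(
        {"Paper A": 3.5, "Paper B": 3.5, "Paper C": 3, "Paper D": 5, "Paper E": 1.5}
    )
    for score, names in board.items():
        print(f"{score}: {', '.join(names)}")
    print("Outliers to discuss:", ", ".join(outliers))
```

Measuring distance from the median rather than the mean keeps one extreme score from shifting the reference point for every other paper.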

After the panel meeting

Panel commentaries on individual papers and overall themes

The role of the assessment panel is to drive policy improvement. In addition to scoring each paper, the panel should provide a brief written commentary on each paper – this should identify what was done well and make suggestions for improvement.

The panel should also report on themes for improvement across the papers, and could make recommendations for changes in practice, areas for further guidance, and development across the organisation.

Panel assessments are also a valuable opportunity to identify exemplars of what was done well and what to avoid.

The panel’s report should also track policy performance by producing a tabular summary of statistics. The panel can also graph the distribution of quantitative scores for a given year and compare them with previous years if they are available.
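The tabular summary and score distribution can be produced from the moderated scores for each assessment round. The Python sketch below assumes the scores are held as a mapping from year to a list of scores on the 1 to 5 half-point scale, with at least one score per year; the `summarise` function and the output layout are illustrative.

```python
from collections import Counter
from statistics import mean, median

# Every point on the half-point scale, from 1.0 to 5.0.
POINTS = [x / 2 for x in range(2, 11)]


def summarise(scores_by_year):
    """Return per-year summary rows and a per-year count of each score.
    Each year needs at least one score."""
    rows, distribution = [], {}
    for year in sorted(scores_by_year):
        scores = scores_by_year[year]
        rows.append({
            "year": year,
            "papers": len(scores),
            "mean": mean(scores),
            "median": median(scores),
            "min": min(scores),
            "max": max(scores),
        })
        counts = Counter(scores)  # 3 and 3.0 count as the same score
        distribution[year] = [counts[p] for p in POINTS]
    return rows, distribution


if __name__ == "__main__":
    rows, distribution = summarise({2023: [3, 3.5, 4, 2.5], 2024: [3.5, 4, 4, 3.5]})
    print("Year  Papers  Mean  Median  Min  Max")
    for r in rows:
        print(f"{r['year']}  {r['papers']:6d}  {r['mean']:4.2f}  "
              f"{r['median']:6.2f}  {r['min']:3}  {r['max']:3}")
    print("Score " + " ".join(f"{p:3}" for p in POINTS))
    for year, counts in distribution.items():
        print(f"{year}  " + " ".join(f"{c:3d}" for c in counts))
```

The distribution rows line up under each point on the scale, so they can be graphed directly or compared year by year.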

A good way of driving the focus on policy quality improvement is to have a Policy Quality Improvement Plan. The results of the review can be used to assess progress on elements of the Plan, and the impact of that progress. Findings of the reviews can also drive updates to the Plan and new areas of focus.

Feedback to authors

Timely feedback to authors and managers has the most impact on improving policy practice. It means the issues are more likely to still be relevant and current, and managers and authors are more likely to still be in their positions. This might mean undertaking reviews several times during the year rather than waiting until the end of the year. Agencies take a variety of approaches, reviewing quarterly, half-yearly, or annually.

Consider holding individual feedback sessions with authors, managers, and peer reviewers on your findings about the quality of advice. Look for opportunities to talk through the panel’s assessment of the paper, main strengths and weaknesses, and how it could have been improved.

The most helpful feedback sessions for authors focus on discussing the overall paper before moving on to scores, with an emphasis on the positives and any concrete suggestions for improvements. Remember the author will have put a lot of care into their paper.

This learning opportunity is worth incorporating into your process for panels and assessments.

Optional external review

After you’ve run an internal panel assessment, you may also choose to undertake an external review to validate and benchmark your internal processes for reviewing papers. An external reviewer may also have useful ideas for improvement.

An external reviewer would usually assess and score a random sample of the papers that your internal panel has previously reviewed. Moderation of your papers’ final scores would then take place, with the panel chair taking into account the external reviewer’s scores and how they compare with the internal panel’s scores.

Reporting agency performance

We recommend that agencies use the following two targets to report on overall performance:

- An average score. For example, this could be that the average score for papers that are assessed is at least 3.5 out of 5.

- A distribution of scores to show the percentage of papers that exceed, meet, or don’t meet the performance target that has been set. For example, this could be that 70% of assessed papers score 3 or higher, 30% score 4 or higher, and no more than 10% score 2.5 or less.
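Both kinds of target can be checked from the same list of moderated scores. The Python sketch below uses the example figures above as default thresholds; the `check_targets` name, its parameter names and the choice to report shares as fractions are assumptions, and agencies would substitute the targets they’ve actually set.

```python
from statistics import mean


def check_targets(scores, average_target=3.5, min_share_3_plus=0.70,
                  min_share_4_plus=0.30, max_share_2_5_or_less=0.10):
    """Compare a set of scores against an average target and distribution
    targets. Defaults are the example targets given in this guide."""
    if not scores:
        raise ValueError("no scores to report on")
    n = len(scores)
    results = {
        "average": mean(scores),
        "share_3_plus": sum(s >= 3 for s in scores) / n,
        "share_4_plus": sum(s >= 4 for s in scores) / n,
        "share_2_5_or_less": sum(s <= 2.5 for s in scores) / n,
    }
    met = {
        "average": results["average"] >= average_target,
        "share_3_plus": results["share_3_plus"] >= min_share_3_plus,
        "share_4_plus": results["share_4_plus"] >= min_share_4_plus,
        # This target is a ceiling, so it is met when the share is at or below it.
        "share_2_5_or_less": results["share_2_5_or_less"] <= max_share_2_5_or_less,
    }
    return results, met


if __name__ == "__main__":
    results, met = check_targets([4, 4, 4, 3.5, 3.5, 3, 3, 4.5, 2.5, 2])
    for name, value in results.items():
        print(f"{name}: {value:.2f} ({'met' if met[name] else 'not met'})")
```

In the usage example, 80% of papers score 3 or higher and 40% score 4 or higher, yet the average (3.4) and the share scoring 2.5 or less (20%) both miss their targets. This illustrates why reporting on the average and the distribution together gives a fuller picture than either alone.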

Reporting on both an average target for policy quality and distribution targets will provide a better reflection of an agency’s performance. Relying on one or the other may not give a true indication of how an agency has performed over time. It’s critical to track the proportion of papers that met the standards (i.e. scored a 3 and above). An average score helps to give information about the balance of the distribution of scores.

Getting a complete picture of agency policy performance

The assessment panel’s findings are only one of the sources of information about the quality of advice. Policy leaders may also consider the results from the Ministerial Policy Satisfaction Survey, an assessment of the policy function’s capability using the Policy Capability Framework, and the skills of the policy team using the Policy Skills Framework, for example.

This information can be used to develop policy quality improvement plans and initiatives.

Policy improvement across the policy system

Our intention is that the information collected from agencies’ performance reporting will enable the Policy Project to reach a system-wide view of policy performance. This will show how agencies are performing relative to one another and the overall system.

Communicating lessons learned for the agency

Agencies should think about how they can share quality of advice findings with policy teams across the agency. You may want to publicise and celebrate good practice and achievements.

Key messages about areas to improve with links to resources can be helpful.

Consider if there are recurring constraints to quality, and if so, what your agency can do to mitigate them.

If you are staggering assessment panels across the year, consider tracking trends. For example, if the same weaknesses are recurring every quarter, stronger actions may be needed to improve in these areas. You can also track whether new initiatives or practices to improve policy quality are actually making a difference.

You can use results to track the effectiveness of any policy quality improvement initiatives or plans.

If you identify negative trends or deficits that put the agency’s reputation at risk, these should be escalated to senior leaders and organisational development teams.